As of 2 August 2025, core obligations for general-purpose AI providers apply in the EU.

New GPAI models must meet transparency and copyright duties now; models already on the EU market before that date have until 2 August 2027 to comply. The European Commission paired the start date with a public training-data summary template, interpretive guidelines for GPAI providers, and a voluntary GPAI Code of Practice to help teams show their work. The Commission’s own summary confirms that GPAI obligations started in August 2025, and independent timelines track the two-year grace period to 2 August 2027.
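The date logic above is simple enough to encode in a diligence tracker. The sketch below is illustrative only: the function name and the choice to return the placement date for new models are assumptions, not anything the Commission publishes, and counsel should confirm how "placed on the market" applies to a given model.

```python
from datetime import date

# Dates taken from the paragraph above.
GPAI_START = date(2025, 8, 2)        # obligations begin to apply
LEGACY_DEADLINE = date(2027, 8, 2)   # grace period end for models already on the market


def compliance_date(placed_on_eu_market: date) -> date:
    """Return the date by which a GPAI model is expected to comply.

    Hypothetical helper: models placed on the EU market before the start
    date get the two-year grace period; later models must comply from the
    day they are placed on the market.
    """
    if placed_on_eu_market < GPAI_START:
        return LEGACY_DEADLINE
    return placed_on_eu_market


if __name__ == "__main__":
    print(compliance_date(date(2025, 3, 1)))
    print(compliance_date(date(2025, 9, 15)))
```

A column like this in the model inventory lets reviewers see at a glance which models are already in scope and which are still inside the grace period.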

Deal context matters: the Commission publicly held the line on dates despite calls to delay, which means buyers now expect evidence over promises inside the data room. See the schedule confirmation reported by Reuters.

What Actually Started: Obligations in Plain English

The August switch flipped two big requirements.

First: baseline transparency for all GPAI providers. Expect to produce technical documentation, instructions for use, and a public training-content summary that enables copyright checks. The Commission’s GPAI obligations materials and the disclosure template announcement explain what good looks like, and law-firm primers recap the practical effects as GPAI obligations come into force.

Second: a higher bar for models that present systemic risk. A model is presumed to fall in this tier when its cumulative training compute exceeds 10^25 FLOPs, and qualitative signals about capability and reach can also bring a model into scope. When a model crosses the line, providers must notify the EU AI Office and implement added safety and security controls. The formal threshold and the “notify within two weeks” rule are spelled out across the Act and the Commission’s guidance.
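A first-pass screen against the compute indicator can be sketched in a few lines. Two loud assumptions: the 6 × parameters × tokens estimate is a common rule of thumb for dense transformer training, not a method this article or the Act prescribes, and the function names are hypothetical. The check covers only the compute side; qualitative signals still need a written assessment.

```python
from datetime import date, timedelta

SYSTEMIC_RISK_FLOPS = 1e25           # compute indicator cited above
NOTIFY_WINDOW = timedelta(weeks=2)   # "notify within two weeks" rule cited above


def estimate_training_flops(params: float, tokens: float) -> float:
    """Rough training-compute estimate (assumption: ~6 FLOPs per parameter per token)."""
    return 6.0 * params * tokens


def exceeds_compute_indicator(flops: float) -> bool:
    """True when estimated cumulative training compute is above the indicator."""
    return flops > SYSTEMIC_RISK_FLOPS


def notify_by(threshold_known_on: date) -> date:
    """Latest notification date, counted from when the threshold is met or foreseen."""
    return threshold_known_on + NOTIFY_WINDOW


if __name__ == "__main__":
    # Hypothetical models: (parameter count, training tokens)
    for name, params, tokens in [("mid-size", 70e9, 15e12), ("frontier", 400e9, 15e12)]:
        flops = estimate_training_flops(params, tokens)
        print(name, f"{flops:.2e}", exceeds_compute_indicator(flops))
    print(notify_by(date(2025, 9, 1)))
```

Keep the inputs (parameter counts, token counts, hardware logs) in the room next to the result, so a reviewer can rerun the arithmetic rather than take the conclusion on trust.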

What Buyers Will Now Expect Inside the Data Room

Think “show me,” not “trust me.”

Organize the room so each claim maps to a rule, a log, or a policy. Use the sections below as a request-and-response blueprint, and host artifacts where access can be monitored and exported using CapLinked’s document management and security features.
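One way to enforce the "each claim maps to a rule, a log, or a policy" discipline is a small index that flags any claim with nothing behind it. This is a minimal sketch: the `Claim` fields, file paths, and obligation labels are hypothetical and would follow your own folder structure.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    """A statement made to buyers, the obligation it answers, and its proof."""
    statement: str
    obligation: str                      # e.g. "training-data summary"
    artifacts: list[str] = field(default_factory=list)  # paths inside the data room


def unsupported(claims: list[Claim]) -> list[Claim]:
    """Return claims that have no artifact attached yet."""
    return [c for c in claims if not c.artifacts]


if __name__ == "__main__":
    claims = [
        Claim("Training data summarized per Commission template",
              "training-data summary",
              ["/transparency/model-a/summary.pdf"]),
        Claim("No model exceeds the systemic-risk compute indicator",
              "systemic-risk determination"),
    ]
    for claim in unsupported(claims):
        print("Needs evidence:", claim.statement)
```

Running a check like this before opening the room turns "show me" into a pre-flight step instead of a surprise in week two of diligence.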

1. Transparency and Copyright Compliance

Buyers will look for a training-data summary for each model family using the Commission’s template. Link that summary to a licence matrix and any opt-out handling. Pair it with model-family instructions for use and technical documentation that reflect known limitations and intended use, aligned to the Commission’s GPAI guidance.

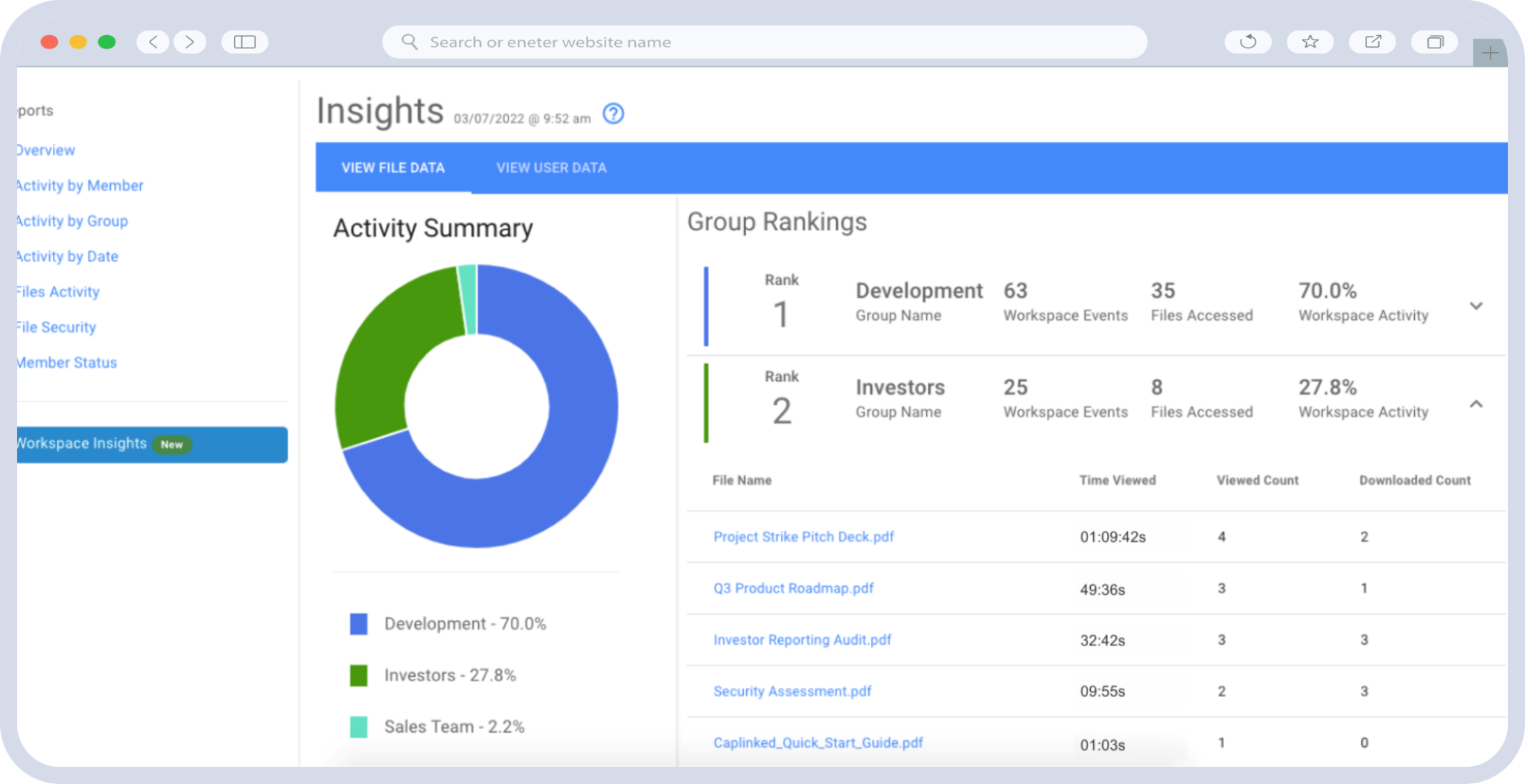

Keep these materials in a workspace where activity is tracked and exportable: CapLinked’s Activity Tracker feature supports report exports for counsel and the board, and the document management view keeps related proofs adjacent.

2. Systemic-risk Positioning

Publish a short written determination on whether any in-scope model meets the systemic-risk threshold. Back it with compute logs, training-run summaries, and capability evaluations. If a model qualifies, include notification evidence to the EU AI Office and the safety and security program that follows from it. The expectations on thresholds and timing are captured in the Commission’s GPAI Q&A and reinforced by practitioner summaries of the 10^25 FLOPs presumption.

House the evidence where control can be proven: CapLinked’s FileProtect DRM keeps sensitive evals revocable after download.

3. Governance, Testing, and Incident Handling

Map ownership: name accountable leads for data sourcing, evaluations, safety approvals, and deprecation. Include a test plan that covers capability evaluations, safety tests, copyright filtering, and post-deployment monitoring cadence. The Commission’s guidance for GPAI providers and Q&A outline what documentation should exist and how post-market monitoring fits.

The New Diligence Checklist: Structure Your Data Room for Speed

Your goal: make review fast, traceable, and exportable. Build the room around the obligations in the EU materials on GPAI provider guidelines and the practical steps in the GPAI Code of Practice.

Model Inventory and Lineage

List every foundation model and major fine-tune with version, training dates, training-data sources, and EU exposure. Link each model to its instructions for use and technical documentation that match the Commission’s baseline transparency expectations. Store lineage diagrams and data-flow maps so reviewers can trace from intake to training to evaluation to deployment without opening a dozen tabs.

Training-data Governance

Publish a training-data summary for each model using the Commission’s official template. Keep license proof, opt-out handling, filtering rules, and a register of proprietary datasets adjacent to each summary. Law-firm primers capture the compliance intent behind the template and how it supports copyright diligence; see overviews from WilmerHale and WSGR.
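The "keep it adjacent" rule can be checked mechanically per model folder. The sketch below assumes a hypothetical naming scheme for the artifacts listed above; adapt the labels to whatever your room actually uses.

```python
# Artifact labels are hypothetical names for the items listed in the paragraph above.
REQUIRED_ARTIFACTS = {
    "training_data_summary",
    "license_proof",
    "opt_out_handling",
    "filtering_rules",
    "proprietary_dataset_register",
}


def missing_artifacts(present: set[str]) -> list[str]:
    """Return the required artifacts a model folder still lacks, sorted for stable reports."""
    return sorted(REQUIRED_ARTIFACTS - present)


if __name__ == "__main__":
    # Hypothetical folder contents per model.
    folders = {
        "model-a": set(REQUIRED_ARTIFACTS),
        "model-b": {"training_data_summary", "license_proof"},
    }
    for model, present in folders.items():
        print(model, missing_artifacts(present))
```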

Evaluations and Safety

Include capability and safety evaluations, test cards, red-team takeaways, and mitigations. If a model could meet the systemic-risk threshold, add the AI Office notification and your safety and security program. The two-part standard (compute on the order of 10^25 FLOPs plus qualitative signals) shows up consistently in practitioner summaries such as Lexology’s analysis and in the Commission’s GPAI Q&A.

Copyright and IP

Post a license matrix covering major datasets and third-party content. Add downstream guidance on acceptable uses, redistribution rules, and required attributions, aligned with the copyright chapter of the Commission’s GPAI Code of Practice and explained in practice notes like Paul Weiss’s memo.

Security and Access Control

Attach access lists for model assets, SSO and MFA coverage, and change-management approvals. Keep exportable access logs ready for counsel and the board. CapLinked’s document management tracks who interacted with which artifact; the support guide shows how Activity Tracker reports export to CSV.
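Once an access log is exported to CSV, answering "who opened what" takes only a few lines. The column names below (`user`, `document`, `action`, `timestamp`) are assumptions made for illustration and are not the documented format of any CapLinked export; rename them to match the real file.

```python
import csv
import io
from collections import defaultdict


def viewers_by_document(csv_text: str) -> dict[str, list[str]]:
    """Group distinct users who viewed each document (hypothetical column names)."""
    viewers: dict[str, set[str]] = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["action"] == "view":
            viewers[row["document"]].add(row["user"])
    return {doc: sorted(users) for doc, users in viewers.items()}


# Made-up sample rows, shaped like a generic activity export.
SAMPLE_EXPORT = """user,document,action,timestamp
alice@bidder-a.example,model_card_v3.pdf,view,2025-09-01T10:02:00Z
bob@bidder-b.example,model_card_v3.pdf,view,2025-09-01T11:15:00Z
alice@bidder-a.example,training_summary.pdf,download,2025-09-02T09:30:00Z
alice@bidder-a.example,model_card_v3.pdf,view,2025-09-03T14:00:00Z
"""

if __name__ == "__main__":
    for doc, users in viewers_by_document(SAMPLE_EXPORT).items():
        print(doc, users)
```

The same grouping works for downloads or any other action type in the export, which is usually what counsel asks for first when a file turns up outside the room.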

Third-party and Cloud

Organize contracts and DPAs for data brokers, annotation vendors, model hosts, evaluation partners, and safety auditors. Include flow-down clauses for copyright cooperation, incident reporting timelines, and security obligations. Reviewers will ask whether your practices align with the Commission’s provider guidance; make that connection explicit in cover notes.

Communications and Incident Response

Post PR and investor-relations playbooks for AI incidents and model-behavior issues. If the company is public, cross-reference how a material AI-related cybersecurity incident would be disclosed under the SEC’s Form 8-K Item 1.05 and when you would instead use Item 8.01 for non-material incidents, as summarized by the National Law Review.

How a Deal Room Makes This Easy

A strong data room does more than store files: it enforces and proves control.

Exportable audit trails: buyers want access logs that drop directly into diligence binders. CapLinked’s Activity Tracker supports CSV and Excel exports; the help article walks through downloading workspace-wide reports.

Dynamic watermarking: stamp the viewer’s name, time, and IP to deter leaks and trace provenance across bidder groups. CapLinked’s document management details watermarking plus document tracking.

Protection that survives download: sensitive evals, training summaries, and unreleased model cards should not live forever in inboxes. CapLinked’s FileProtect DRM lets you revoke access after download; industry roundups like Expert Insights highlight FileProtect’s rescind-on-demand controls.

Q&A that captures evidence: route reviewer questions to the right SME, answer inside the room, and link to the exact artifact using CapLinked EZ Q&A. This keeps the thread auditable and prevents context from scattering across email chains.

One place for policy and timeline context: pin the latest Commission materials—the GPAI guidelines, the training-data template, and the Code of Practice—so reviewers can verify scope and timing without leaving the room. News coverage has tracked timing friction and industry posture; if you need outside context, link summaries from Reuters and The Verge.

A Practical Workflow for August and Beyond

Start with a one-pager: map which obligations apply to your models now, your systemic-risk position, and where the evidence lives. Use the Commission’s timeline in the GPAI provider guidelines to anchor dates.

Stage training-data summaries by model and version: use the official template, link each summary to license proof and opt-out handling, and keep proprietary dataset registers adjacent.

Pre-answer risk questions: publish capability and safety evaluations, red-team insights, and mitigations. If you are in systemic-risk territory, include the AI Office notification and your safety and security program; the expectations and timing are captured in the Commission’s GPAI Q&A.

Lock down controls before inviting bidders: enforce least-privilege permissions, enable watermarking, and share the most sensitive files with revoke-after-download turned on via FileProtect. Keep activity exports handy through Activity Tracker.

Common Pitfalls to Avoid

Policy without proof: every statement needs an artifact. Screenshots help explain; logs and reports satisfy diligence.

Hand-wavy training data: the Commission gave everyone a standard template. Use it; keep license details adjacent.

Ignoring systemic risk: if compute and capability suggest a model might cross the line, document why it does or does not, and act accordingly. The threshold and notification expectations are set out in the GPAI Q&A and widely discussed in practitioner notes like WSGR’s explainer.

Loose access around model assets: if you cannot export who opened a model card or training summary, expect a longer, pricier review.

Turn August Into an Advantage

The AI Act moved from talk to timelines; buyers now expect evidence that maps cleanly to obligations.

Targets that show how they meet the GPAI duties, explain their systemic-risk position with references to the 10^25 FLOPs presumption, and export access logs on request will clear diligence faster and protect valuation.

Set up a room that proves who saw what and when, watermarks sensitive files, and lets you kill downstream access if something escapes.